Load Balancing REST, HTTP and Web Services

Membrane API Gateway can act as a load balancer and distribute calls across several endpoints. Load balancing improves performance when many clients access a service concurrently. The router also offers failover for web services.

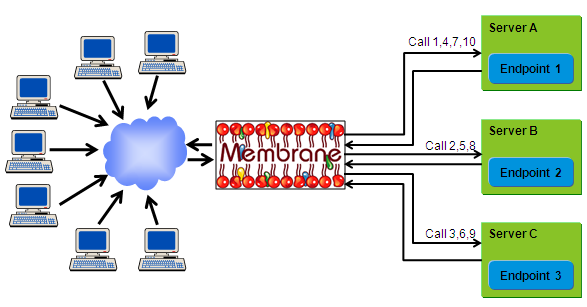

Figure 1:

The Architecture of the Load Balancer

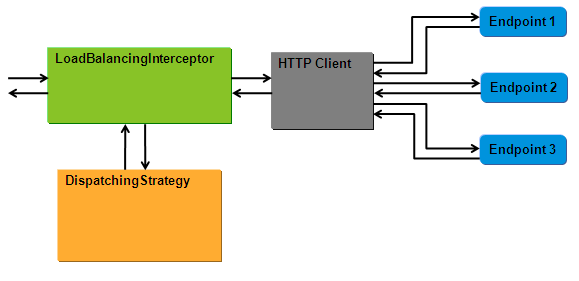

A load balancer consists of two components: the LoadBalancingInterceptor and a DispatchingStrategy. The LoadBalancingInterceptor asks the DispatchingStrategy which endpoint the request message should be sent to.

Figure 2:

Membrane comes with a round-robin strategy and a by-thread strategy, both of which can be used with the LoadBalancingInterceptor. You can also plug in your own implementation of the DispatchingStrategy interface to get a different balancing behavior.
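The split between the interceptor and the strategy can be illustrated with a small, language-neutral sketch. It is not Membrane's Java API: the class names, the `dispatch` signature and the hash-based strategy below are all assumptions chosen for illustration. The point is only that the balancer owns the endpoint list and delegates the choice of endpoint to a pluggable strategy object.

```python
import zlib
from typing import List, Protocol, Sequence, Tuple


class DispatchingStrategy(Protocol):
    """Hypothetical contract: choose the endpoint for one request."""

    def dispatch(self, endpoints: Sequence[str], request: str) -> str:
        ...


class HashStrategy:
    """Illustrative custom strategy: the same request always maps to the same endpoint."""

    def dispatch(self, endpoints: Sequence[str], request: str) -> str:
        # crc32 is deterministic across runs, unlike the built-in hash() for strings.
        index = zlib.crc32(request.encode("utf-8")) % len(endpoints)
        return endpoints[index]


class LoadBalancer:
    """Plays the interceptor's role: holds the endpoints and asks the strategy."""

    def __init__(self, endpoints: Sequence[str], strategy: DispatchingStrategy) -> None:
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self.endpoints: List[str] = list(endpoints)
        self.strategy = strategy

    def route(self, request: str) -> Tuple[str, str]:
        # Delegate the decision, then return the chosen target with the request.
        target = self.strategy.dispatch(self.endpoints, request)
        return target, request
```

Swapping `HashStrategy` for another object with the same `dispatch` method changes the balancing behavior without touching `LoadBalancer`, which is the design idea behind a pluggable strategy.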

Configuration

You can configure the load balancer in the file:

<<Membrane installation dir>>/conf/proxies.xml

The following listing shows a service proxy that configures the LoadBalancingInterceptor through the balancer element. The node elements inside the cluster list the endpoints the load is balanced across. The DispatchingStrategy is set to the RoundRobinStrategy by the roundRobinStrategy element.

    <serviceProxy name="Balancer" port="8080">
      <path>/service</path>
      <balancer>
        <clusters>
          <cluster>
            <node host="www.thomas-bayer.com"/>
            <node host="www.thomas-bayer.com"/>
          </cluster>
        </clusters>
        <roundRobinStrategy/>
      </balancer>
    </serviceProxy>

RoundRobinStrategy

This DispatchingStrategy distributes the calls evenly across the endpoints. Figure 1 shows how ten calls are distributed between three endpoints.
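The even distribution can be reproduced with a minimal sketch. It assumes the simplest form of round robin (cycling through the endpoints in order); the class name and the endpoint names are hypothetical and this is not Membrane's implementation.

```python
from collections import Counter
from itertools import cycle


class RoundRobin:
    """Hands out endpoints in a fixed rotating order."""

    def __init__(self, endpoints):
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self._cycle = cycle(list(endpoints))

    def dispatch(self):
        # Each call returns the next endpoint and wraps around at the end.
        return next(self._cycle)


strategy = RoundRobin(["endpoint-1", "endpoint-2", "endpoint-3"])
counts = Counter(strategy.dispatch() for _ in range(10))
print(dict(counts))  # {'endpoint-1': 4, 'endpoint-2': 3, 'endpoint-3': 3}
```

Ten calls cannot be split into three equal parts, so the first endpoint in the rotation receives one call more than the others.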

ByThreadStrategy

Some endpoints can process only a limited number of calls concurrently. For instance, a service that wraps a library that is not thread-safe can serve only one client at a time; in that case, limit the number of threads to one. The ByThreadStrategy limits the number of concurrent calls per endpoint to the value of the maxNumberOfThreadsPerEndpoint property.

    <serviceProxy name="Balancer" port="8080">
      <path>/service</path>
      <balancer>
        <clusters>
          <cluster>
            <node host="www.thomas-bayer.com"/>
            <node host="www.thomas-bayer.com"/>
          </cluster>
        </clusters>
        <byThreadStrategy maxNumberOfThreadsPerEndpoint="10" retryTimeOnBusy="1000"/>
      </balancer>
    </serviceProxy>
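The idea of capping concurrent calls per endpoint can be sketched as follows. This is an illustration under stated assumptions, not Membrane's code: it assumes that retryTimeOnBusy is a wait time in milliseconds before the next attempt when every endpoint is at its limit, and the `max_retries` parameter, the class name and the method names are hypothetical.

```python
import threading
import time


class ByThreadLimiter:
    """Caps the number of in-flight calls per endpoint."""

    def __init__(self, endpoints, max_per_endpoint, retry_time_on_busy_ms, max_retries=5):
        self._in_flight = {endpoint: 0 for endpoint in endpoints}
        self._max = max_per_endpoint
        self._retry_seconds = retry_time_on_busy_ms / 1000.0
        self._max_retries = max_retries  # hypothetical knob, not taken from the source
        self._lock = threading.Lock()

    def acquire(self):
        """Return an endpoint with a free slot, waiting and retrying while all are busy."""
        for attempt in range(self._max_retries + 1):
            with self._lock:
                for endpoint, active in self._in_flight.items():
                    if active < self._max:
                        self._in_flight[endpoint] = active + 1
                        return endpoint
            if attempt < self._max_retries:
                # Every endpoint is at its limit: wait before trying again.
                time.sleep(self._retry_seconds)
        raise RuntimeError("all endpoints are busy")

    def release(self, endpoint):
        """Free the slot once the call to the endpoint has completed."""
        with self._lock:
            self._in_flight[endpoint] -= 1
```

A caller would wrap each forwarded request in `acquire()` and `release()`, ideally with `try`/`finally` so that a failed call still frees its slot. With `max_per_endpoint=1`, each endpoint serves one call at a time, which matches the case of a library that is not thread-safe.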